Highly Scalable Stream Processing with Apache Kafka and Azure Kubernetes Service - Part I

In this post, I share what I learned about building a highly scalable, near real-time stream processing solution with Apache Kafka and Azure Kubernetes Service. This will be a multi-part series, so that no single post is too content heavy.

We have all been in conversations where high scalability and high availability are a common ask in any architecture design. I have been in customer meetings where a recent hot topic was how to scale a solution that operationalizes machine learning with DevOps, and how we can build a solution around it.

Apache Kafka and AKS

I did extensive research, especially around Kafka and Kubernetes, but found little guidance out there. So, I decided to build a solution that demonstrates the capabilities and performance of the two together. It took me a day or two to get this working, and I share the lessons learned in this post so that the setup can be easily replicated.

Why should we use Kafka and AKS?

One of the reasons I chose this approach is the software trend around Kafka and Kubernetes. Each is powerful on its own, but together they deliver elegant solutions to difficult challenges. Kafka is used for building real-time data pipelines and streaming apps, whereas Kubernetes is used for immutable, well-versioned deployments.

Architecture Overview

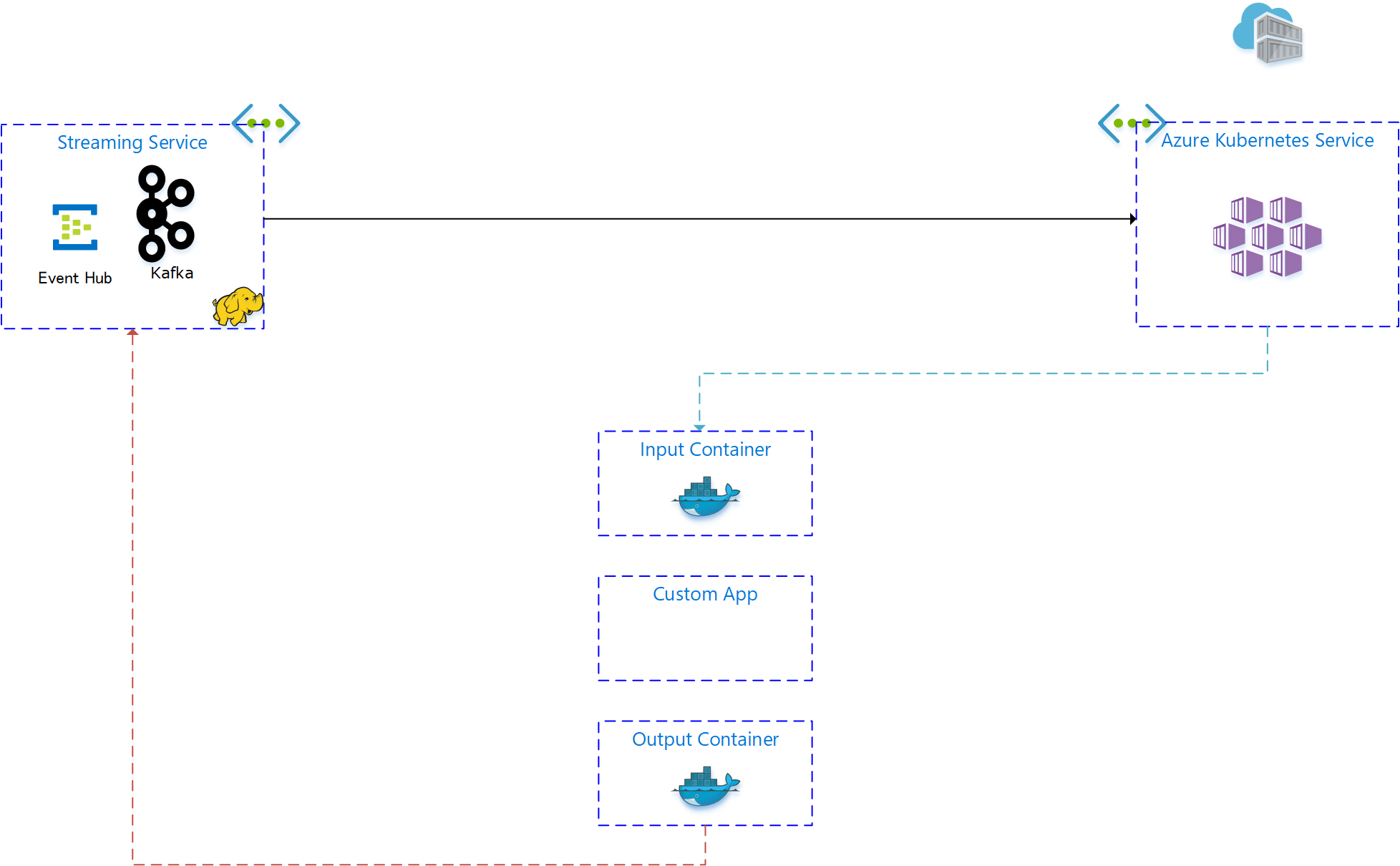

Here is an overview of the architecture.

Let’s break down the architecture for discussion. Kafka runs on Azure HDInsight, where you can easily run popular open source frameworks including Apache Hadoop, Spark, and Kafka. It is a cost-effective, enterprise-grade service for open source analytics, which we use here for stream processing. An alternative is Azure Event Hubs, a fully managed, real-time data ingestion service that’s simple, trusted, and scalable.

We use Azure Kubernetes Service to deploy our application for a couple of reasons. Kubernetes is a production-grade container orchestrator that runs containerized applications at scale. The fundamental reason is immutable deployment. As the name suggests, it is a deployment that cannot be changed after it has been deployed; a change is rolled out as a new version instead. This principle is applied throughout the pipeline because it drives good system design. With Kubernetes, we can introduce a good versioning strategy, separate deployments, blue/green deployments, and separation of concerns, including routing and security. All of these can be achieved end-to-end with minimal direct human interaction with the software.
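To make the immutable and blue/green ideas a little more concrete, here is a minimal sketch in plain Python. It does not call any Kubernetes API; the `Deployment` and `Service` classes, the `scorer` name, and the image tags are all hypothetical stand-ins chosen for illustration. The point is only that an update creates a new versioned deployment and traffic is switched over, rather than the running deployment being edited in place.

```python
from dataclasses import FrozenInstanceError, dataclass, replace


@dataclass(frozen=True)
class Deployment:
    """A hypothetical, simplified stand-in for a versioned deployment."""
    name: str
    image: str  # illustrative image tag, e.g. "scorer:1.0"
    slot: str   # "blue" or "green"


class Service:
    """Routes traffic to exactly one deployment at a time."""

    def __init__(self, live: Deployment) -> None:
        self.live = live

    def switch_to(self, candidate: Deployment) -> Deployment:
        """Point traffic at the candidate and return the previous deployment."""
        previous, self.live = self.live, candidate
        return previous


blue = Deployment(name="scorer", image="scorer:1.0", slot="blue")
service = Service(live=blue)

# An update never mutates `blue`; it creates a new deployment in the other slot.
green = replace(blue, image="scorer:1.1", slot="green")

try:
    blue.image = "scorer:1.1"  # an in-place change is rejected
    mutated = True
except FrozenInstanceError:
    mutated = False

previous = service.switch_to(green)  # cut traffic over to the new version
print(service.live.image, "| rollback target:", previous.image)
```

Because the old deployment is left untouched, rolling back in this sketch is just another `switch_to(previous)` call.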

This architecture removes the need to implement connectors to various messaging services, so we can focus on the core engineering of the software. The input container receives events from Kafka and routes them to the relevant custom application; the output container then sends the results back to Kafka.
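The flow described above can be sketched roughly as follows. This is an assumption-laden illustration, not the actual implementation: in-memory `deque` objects stand in for the Kafka input and output topics, and the event shape (`app`, `payload`), the `run_pipeline` function, and the two example applications are hypothetical names introduced here.

```python
from collections import deque
from typing import Callable, Deque, Dict

# Hypothetical event shape: {"app": <target application name>, "payload": <data>}
Event = Dict[str, object]
Handler = Callable[[object], object]


def run_pipeline(
    input_topic: Deque[Event],
    output_topic: Deque[Event],
    apps: Dict[str, Handler],
) -> None:
    """Drain input_topic, route each event to its app, and publish the result."""
    while input_topic:
        event = input_topic.popleft()          # input container: receive an event
        handler = apps.get(str(event["app"]))  # routing: pick the custom application
        if handler is None:
            continue                           # unknown target: skipped in this sketch
        result = handler(event["payload"])     # custom application does the work
        # Output container: publish the result to the outgoing topic.
        output_topic.append({"app": event["app"], "result": result})


# Two stand-in "custom applications".
apps: Dict[str, Handler] = {
    "uppercase": lambda text: str(text).upper(),
    "length": lambda text: len(str(text)),
}

incoming: Deque[Event] = deque([
    {"app": "uppercase", "payload": "hello"},
    {"app": "length", "payload": "hello"},
    {"app": "unknown", "payload": "ignored"},
])
outgoing: Deque[Event] = deque()

run_pipeline(incoming, outgoing, apps)
print(list(outgoing))
```

The custom applications only see a payload and return a result; they never touch the messaging layer, which is the separation this architecture is aiming for.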

If we apply this design to operationalize a machine learning strategy, ingested data can be streamed into the input container, and our custom applications, running on TensorFlow, Keras, PyTorch, R, etc., can then be executed against it. The applications model and train on the data before sending the results back to Kafka to be served by any frontend application.

In the next part of this series, we will walk through the setup and configuration of Kubernetes. Stay tuned!

Cheers!